There may be ten definitions of what “the cloud” in cloud computing means.

Most of them will agree that “on-demand” and “pay-per-use” are its key characteristics, and all would use Amazon Web Services as an example of the cloud. So it is confusing when people call MapReduce a cloud technology, because MapReduce is not associated with either “on-demand” or “pay-per-use”.

- It is faster. In one case, it is 60 times faster than Hadoop (the actual speedup depends on the application and the input data).

- It is more scalable. It has a fully distributed architecture, so there is no single point of bottleneck.

- It is more fault tolerant. Again due to its fully distributed architecture, it has no single point of failure.

- It is dramatically simpler. It has only 3,000 lines of code, two orders of magnitude smaller than Hadoop.

MapReduce libraries have been written in many programming languages, with different levels of optimization. A popular open-source implementation is Apache Hadoop. The name MapReduce originally referred to the proprietary Google technology but has since been genericized.

Overview

MapReduce is a framework for processing parallelizable problems across huge datasets using a large number of computers (nodes), collectively referred to as a cluster (if all nodes are on the same local network and use similar hardware) or a grid (if the nodes are shared across geographically and administratively distributed systems, and use more heterogeneous hardware). Computational processing can occur on data stored either in a filesystem (unstructured) or in a database (structured). MapReduce can take advantage of locality of data, processing data on or near the storage assets to decrease transmission of data.

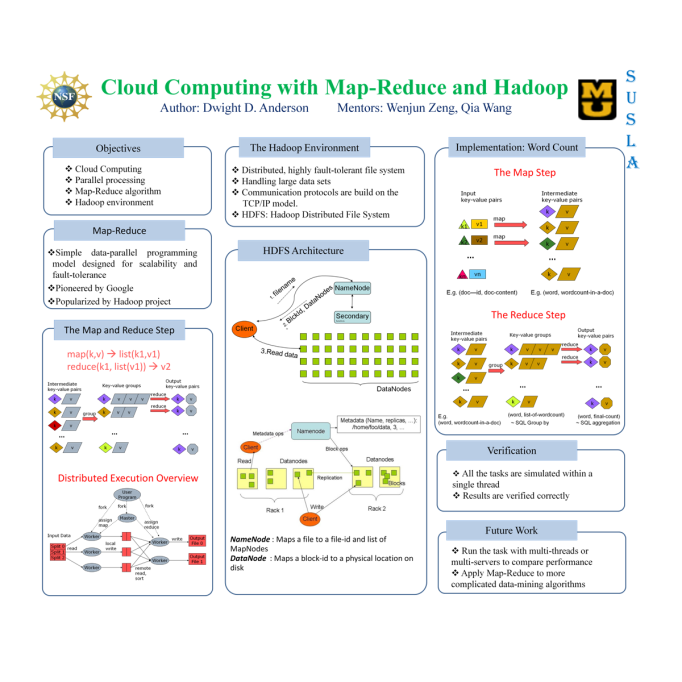

“Map” step: The master node takes the input, divides it into smaller sub-problems, and distributes them to worker nodes. A worker node may do this again in turn, leading to a multi-level tree structure. The worker node processes the smaller problem, and passes the answer back to its master node.

“Reduce” step: The master node then collects the answers to all the sub-problems and combines them in some way to form the output – the answer to the problem it was originally trying to solve.

MapReduce allows for distributed processing of the map and reduction operations. Provided that each mapping operation is independent of the others, all maps can be performed in parallel – though in practice this is limited by the number of independent data sources and/or the number of CPUs near each source. Similarly, a set of “reducers” can perform the reduction phase, provided that all outputs of the map operation that share the same key are presented to the same reducer at the same time, or that the reduction function is associative. While this process can often appear inefficient compared to algorithms that are more sequential, MapReduce can be applied to significantly larger datasets than a single “commodity” server can handle – a large server farm can use MapReduce to sort a petabyte of data in only a few hours. The parallelism also offers some possibility of recovering from partial failure of servers or storage during the operation: if one mapper or reducer fails, the work can be rescheduled – assuming the input data is still available.

Another way to look at MapReduce is as a 5-step parallel and distributed computation:

1. Prepare the Map() input – the “MapReduce system” designates Map processors, assigns the K1 input key value each processor would work on, and provides that processor with all the input data associated with that key value.

2. Run the user-provided Map() code – Map() is run exactly once for each K1 key value, generating output organized by key values K2.

3. “Shuffle” the Map output to the Reduce processors – the MapReduce system designates Reduce processors, assigns the K2 key value each processor would work on, and provides that processor with all the Map-generated data associated with that key value.

4. Run the user-provided Reduce() code – Reduce() is run exactly once for each K2 key value produced by the Map step.

5. Produce the final output – the MapReduce system collects all the Reduce output, and sorts it by K2 to produce the final outcome.

Logically these 5 steps can be thought of as running in sequence – each step starts only after the previous step is completed – though in practice, of course, they can be intertwined, as long as the final result is not affected.

In many situations the input data might already be distributed (“sharded”) among many different servers, in which case step 1 could sometimes be greatly simplified by assigning Map servers that would process the locally present input data. Similarly, step 3 could sometimes be sped up by assigning Reduce processors that are as much as possible local to the Map-generated data they need to process.
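The five steps can be imitated on a single machine. The following is a minimal sketch, not a distributed implementation: the names `run_mapreduce`, `count_words`, and `total` are made up for illustration, and everything runs sequentially in memory.

```python
from collections import defaultdict


def run_mapreduce(inputs, map_fn, reduce_fn):
    """Run the five logical steps sequentially on one machine.

    inputs    -- dict mapping each K1 key to its input data
    map_fn    -- map_fn(k1, data) yields (k2, value) pairs
    reduce_fn -- reduce_fn(k2, values) returns one result for that key
    """
    # Steps 1 and 2: hand each K1 key its data and call Map() once per key.
    map_output = []
    for k1, data in inputs.items():
        map_output.extend(map_fn(k1, data))

    # Step 3: "shuffle" by grouping every value under its K2 key.
    groups = defaultdict(list)
    for k2, value in map_output:
        groups[k2].append(value)

    # Step 4: call Reduce() exactly once per K2 key.
    reduced = {k2: reduce_fn(k2, values) for k2, values in groups.items()}

    # Step 5: collect the results and sort them by K2.
    return sorted(reduced.items())


def count_words(doc_id, text):
    # Hypothetical Map(): emit (word, 1) for every word in one document.
    for word in text.split():
        yield word, 1


def total(word, counts):
    # Hypothetical Reduce(): add up the counts for one word.
    return sum(counts)


docs = {1: "to be or not to be", 2: "to do"}
result = run_mapreduce(docs, count_words, total)
print(result)
```

A real system would run the Map and Reduce calls on different machines and overlap the steps, but the result should be the same as this sequential version.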

Logical view

The Map and Reduce functions of MapReduce are both defined with respect to data structured in (key, value) pairs. Map takes one pair of data with a type in one data domain, and returns a list of pairs in a different domain:

Map(k1,v1) → list(k2,v2)

The Map function is applied in parallel to every pair in the input dataset. This produces a list of pairs for each call. After that, the MapReduce framework collects all pairs with the same key from all lists and groups them together, creating one group for each key.

The Reduce function is then applied in parallel to each group, which in turn produces a collection of values in the same domain:

Reduce(k2, list(v2)) → list(v3)

Each Reduce call typically produces either one value v3 or an empty return, though one call is allowed to return more than one value. The returns of all calls are collected as the desired result list.

Thus the MapReduce framework transforms a list of (key, value) pairs into a list of values. This behavior is different from the typical functional programming map and reduce combination, which accepts a list of arbitrary values and returns one single value that combines all the values returned by map.
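The difference can be illustrated with a small sketch using only the Python standard library. The word list and variable names are invented for the example; the grouping step stands in for what a MapReduce framework would do between the two phases.

```python
from functools import reduce
from itertools import groupby
from operator import itemgetter

words = ["a", "b", "a", "c", "b", "a"]

# Functional style: map over arbitrary values, then fold them into ONE value.
total_length = reduce(lambda acc, n: acc + n, map(len, words), 0)

# MapReduce style: map emits (key, value) pairs, the pairs are grouped by key,
# and reduce runs once per group, so the result is a LIST of values.
pairs = [(word, 1) for word in words]
pairs.sort(key=itemgetter(0))  # groupby needs equal keys to be adjacent
per_key = [
    sum(value for _, value in group)
    for _, group in groupby(pairs, key=itemgetter(0))
]

print(total_length)  # a single combined value
print(per_key)       # one value per distinct key
```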

It is necessary but not sufficient to have implementations of the map and reduce abstractions in order to implement MapReduce. Distributed implementations of MapReduce require a means of connecting the processes performing the Map and Reduce phases. This may be a distributed file system. Other options are possible, such as direct streaming from mappers to reducers, or for the mapping processors to serve up their results to reducers that query them.

As an example, imagine that for a database of 1.1 billion people, one would like to compute the average number of social contacts a person has according to age. In SQL such a query could be expressed as:

    SELECT age, AVG(contacts)
    FROM social.person
    GROUP BY age
    ORDER BY age

Using MapReduce, the K1 key values could be the integers 1 through 1,100, each representing a batch of 1 million records, the K2 key value could be a person’s age in years, and this computation could be achieved using the following functions:

    function Map is
        input: integer K1 between 1 and 1100, representing a batch of 1 million social.person records
        for each social.person record in the K1 batch do
            let Y be the person’s age
            let N be the number of contacts the person has
            produce one output record (Y,(N,1))
        repeat
    end function

    function Reduce is
        input: age (in years) Y
        for each input record (Y,(N,C)) do
            Accumulate in S the sum of N*C
            Accumulate in Cnew the sum of C
        repeat
        let A be S/Cnew
        produce one output record (Y,(A,Cnew))
    end function

The MapReduce system would line up the 1,100 Map processors and provide each with its corresponding 1 million input records. The Map step would produce 1.1 billion (Y,(N,1)) records, with Y values ranging between, say, 8 and 103. Because the average is needed per age, the MapReduce system would then shuffle the key/value pairs by age, line up the 96 Reduce processors, and provide each with its millions of corresponding input records. The Reduce step would result in a much reduced set of only 96 output records (Y,A), which would be put in the final result file, sorted by Y.
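The Map and Reduce functions of this example could look roughly like the following in Python. This is a sketch on a tiny made-up dataset: the function names, the two small batches, and the in-memory grouping that stands in for the shuffle are all assumptions for illustration.

```python
from collections import defaultdict


def map_batch(batch):
    """Map: emit (age, (contacts, 1)) for every person record in one batch."""
    for age, contacts in batch:
        yield age, (contacts, 1)


def reduce_age(age, records):
    """Reduce: count-weighted average over the (N, C) records of one age."""
    weighted_sum = sum(n * c for n, c in records)
    count = sum(c for _, c in records)
    return age, (weighted_sum / count, count)


# Two tiny batches of (age, contacts) records instead of 1,100 large ones.
batches = [
    [(30, 100), (25, 40)],
    [(30, 200), (25, 60), (40, 10)],
]

# Stand-in for the shuffle: group all Map output by age.
groups = defaultdict(list)
for batch in batches:
    for age, record in map_batch(batch):
        groups[age].append(record)

# One Reduce call per age, with the output sorted by age.
averages = [reduce_age(age, groups[age]) for age in sorted(groups)]
print(averages)
```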

The count in each record matters when the data is reduced more than once. If we do not carry the record count along, the computed average will be wrong. For example:

    -- map output #1: age, quantity of contacts
    10, 9
    10, 9
    10, 9

    -- map output #2: age, quantity of contacts
    10, 9
    10, 9

    -- map output #3: age, quantity of contacts
    10, 10

If we reduce files #1 and #2, we get a new file with an average of 9 contacts for a 10-year-old person ((9+9+9+9+9)/5):

    -- reduce step #1: age, average of contacts
    10, 9

If we then reduce it with file #3 without carrying along the count of records already seen, we end up with an average of 9.5 contacts for a 10-year-old person ((9+10)/2), which is wrong. The correct answer is approximately 9.17 ((9+9+9+9+9+10)/6).
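The arithmetic can be checked with a short sketch. The helper name `merge` is invented for this example; it combines partial results that each carry an (average, count) pair, which is the role the count plays in the records above.

```python
def merge(partials):
    """Combine (average, count) partial results into one (average, count)."""
    total = sum(avg * count for avg, count in partials)
    count = sum(count for _, count in partials)
    return total / count, count


# Files #1 and #2 together: five records with 9 contacts each.
first = merge([(9, 1)] * 5)

# Reducing again with file #3, keeping the counts: the correct average.
final = merge([first, (10, 1)])

# Reducing again without the counts: treats the two inputs as equal weight.
naive = (first[0] + 10) / 2

print(first, final, naive)
```

With the counts carried along, the second reduction weights the earlier average by the five records behind it, so the result matches the average over all six records.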

Dataflow

The frozen part of the MapReduce framework is a large distributed sort. The hot spots, which the application defines, are:

- an input reader

- a Map function

- a partition function

- a compare function

- a Reduce function

- an output writer

Input reader

The input reader divides the input into appropriately sized “splits” (in practice typically 16 MB to 128 MB) and the framework assigns one split to each Map function. The input reader reads data from stable storage (typically a distributed file system) and generates key/value pairs.

A common example will read a directory full of text files and return each line as a record.
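A line-oriented reader of this kind might look like the sketch below. It is a simplification: it reads from a local temporary directory rather than a distributed file system, it does not divide files into splits, and the choice of (file name, line number) as the key is an assumption made for the example.

```python
import tempfile
from pathlib import Path


def read_records(directory):
    """Yield ((file name, line number), line text) for each line of each .txt file."""
    for path in sorted(Path(directory).glob("*.txt")):
        with path.open(encoding="utf-8") as handle:
            for line_number, line in enumerate(handle, start=1):
                yield (path.name, line_number), line.rstrip("\n")


# Build a small throwaway directory so the example is self-contained.
with tempfile.TemporaryDirectory() as tmp:
    Path(tmp, "a.txt").write_text("first line\nsecond line\n", encoding="utf-8")
    Path(tmp, "b.txt").write_text("third line\n", encoding="utf-8")
    records = list(read_records(tmp))

print(records)
```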

Map function

The Map function takes a series of key/value pairs, processes each, and generates zero or more output key/value pairs. The input and output types of the map can be (and often are) different from each other.

If the application is doing a word count, the map function would break the line into words and output a key/value pair for each word. Each output pair would contain the word as the key and the number of instances of that word in the line as the value.
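A word-count Map function along these lines could be sketched as follows. The function name and the whitespace-based tokenization are assumptions; the input key is accepted but not used.

```python
from collections import Counter


def map_word_count(key, line):
    """Emit (word, occurrences in this line) for each distinct word in the line."""
    # The input key (for instance a file name and line number) is ignored here.
    return list(Counter(line.split()).items())


pairs = map_word_count(("a.txt", 1), "to be or not to be")
print(pairs)
```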

Partition function

Each Map function output is allocated to a particular reducer by the application’s partition function for sharding purposes. The partition function is given the key and the number of reducers and returns the index of the desired reducer.

A typical default is to hash the key and use the hash value modulo the number of reducers. It is important to pick a partition function that gives an approximately uniform distribution of data per shard for load-balancing purposes, otherwise the MapReduce operation can be held up waiting for slow reducers (reducers assigned more than their share of data) to finish.
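The hash-modulo default can be sketched in a few lines. The function name is illustrative, and `zlib.crc32` is used here only because it gives the same value in every process, whereas Python's built-in `hash()` for strings is randomized per run; a real framework may use a different hash.

```python
import zlib


def partition(key, num_reducers):
    """Return the index of the reducer responsible for this key."""
    # A stable hash guarantees that every mapper sends a given key
    # to the same reducer.
    return zlib.crc32(key.encode("utf-8")) % num_reducers


keys = ["apple", "banana", "cherry", "apple"]
assignments = [partition(key, 4) for key in keys]
print(assignments)
```

Whether the resulting distribution is approximately uniform depends on the keys: if a few keys carry most of the data, hashing alone will not balance the load.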

Between the map and reduce stages, the data is shuffled (parallel-sorted / exchanged between nodes) in order to move the data from the map node that produced it to the shard in which it will be reduced. The shuffle can sometimes take longer than the computation time depending on network bandwidth, CPU speeds, data produced and time taken by map and reduce computations.

Comparison function

The input for each Reduce is pulled from the machine where the Map ran and sorted using the application’s comparison function.
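As a sketch of how an application-supplied comparison function might drive that sort, the example below orders keys case-insensitively. The comparator and the sample pairs are invented for illustration; `functools.cmp_to_key` adapts a classic three-way comparator to Python's `sorted`.

```python
from functools import cmp_to_key


def compare_keys(a, b):
    """Three-way, case-insensitive comparison: negative, zero, or positive."""
    a, b = a.lower(), b.lower()
    return (a > b) - (a < b)


# Pairs as they might arrive from several map tasks, in no particular order.
fetched = [("pear", 1), ("Apple", 2), ("apple", 1), ("Banana", 3)]

sorted_pairs = sorted(
    fetched,
    key=cmp_to_key(lambda x, y: compare_keys(x[0], y[0])),
)
print(sorted_pairs)
```

Because the comparator treats "Apple" and "apple" as equal, the two pairs end up adjacent and would be handed to the same Reduce call.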

Reduce function

The framework calls the application’s Reduce function once for each unique key in the sorted order. The Reduce can iterate through the values that are associated with that key and produce zero or more outputs.

In the word count example, the Reduce function takes the input values, sums them and generates a single output of the word and the final sum.
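A matching Reduce function for the word count might be sketched like this. The names are illustrative, and `itertools.groupby` stands in for the framework's job of calling Reduce once per unique key on already sorted input.

```python
from itertools import groupby
from operator import itemgetter


def reduce_word_count(word, counts):
    """Sum all counts for one word and emit a single (word, total) pair."""
    return word, sum(counts)


# Map output after the shuffle, already sorted by key.
sorted_pairs = [("be", 2), ("not", 1), ("or", 1), ("to", 2), ("to", 1)]

output = [
    reduce_word_count(word, (count for _, count in group))
    for word, group in groupby(sorted_pairs, key=itemgetter(0))
]
print(output)
```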

Output writer

The output writer writes the output of the Reduce to stable storage, usually a distributed file system.

Performance considerations

MapReduce programs are not guaranteed to be fast. The main benefit of this programming model is that it exploits the platform’s optimized shuffle operation, so the author only has to write the Map and Reduce parts of the program. In practice, however, the author of a MapReduce program still has to take the shuffle step into consideration; in particular, the partition function and the amount of data written by the Map function can have a large impact on performance. Additional modules such as the Combiner function can help to reduce the amount of data written to disk and transmitted over the network.
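The effect of a combiner can be illustrated with a word-count sketch. The function names are hypothetical, and the combiner here simply pre-aggregates one mapper's output in memory; this only works because addition is associative and commutative.

```python
from collections import Counter


def map_words(line):
    """Emit one (word, 1) pair per word occurrence."""
    return [(word, 1) for word in line.split()]


def combine(pairs):
    """Pre-aggregate one mapper's output locally before it is shuffled."""
    totals = Counter()
    for word, count in pairs:
        totals[word] += count
    return list(totals.items())


raw = map_words("the cat and the dog and the bird")
combined = combine(raw)

# Fewer pairs leave the mapper, so less data is written and transmitted.
print(len(raw), len(combined))
```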

When designing a MapReduce algorithm, the author needs to choose a good tradeoff[6] between the computation and the communication costs. Communication cost often dominates the computation cost,[6] and many MapReduce implementations are designed to write all communication to distributed storage for crash recovery.

For processes that complete fast, and where the data fits into main memory of a single machine or a small cluster, using a MapReduce framework usually is not effective: since these frameworks are designed to recover from the loss of whole nodes during the computation, they write interim results to distributed storage. This crash recovery is expensive, and only pays off when the computation involves many computers and a long runtime of the computation – a task that completes in seconds can just be restarted in the case of an error; and the likelihood of at least one machine failing grows quickly with the cluster size. On such problems, implementations keeping all data in memory and simply restarting a computation on node failures, or – when the data is small enough – non-distributed solutions will often be faster than a MapReduce system.
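How quickly the chance of at least one failure grows with cluster size can be estimated with a back-of-the-envelope sketch. It assumes that machines fail independently and with the same probability during a job, which is a simplification, and the per-machine probability of 0.001 is an arbitrary illustrative value.

```python
def p_any_failure(p_node, nodes):
    """Probability that at least one of `nodes` machines fails, assuming
    independent failures with per-machine probability `p_node`."""
    return 1 - (1 - p_node) ** nodes


for nodes in (1, 10, 100, 1000):
    print(nodes, round(p_any_failure(0.001, nodes), 3))
```

Under these assumptions a failure that is very unlikely on one machine becomes more likely than not on a thousand machines, which is why crash recovery pays off mainly for large, long-running jobs.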

Distribution and reliability

MapReduce achieves reliability by parceling out a number of operations on the set of data to each node in the network. Each node is expected to report back periodically with completed work and status updates. If a node falls silent for longer than that interval, the master node (similar to the master server in the Google File System) records the node as dead and sends out the node’s assigned work to other nodes. Individual operations use atomic operations for naming file outputs as a check to ensure that there are not parallel conflicting threads running. When files are renamed, it is possible to also copy them to another name in addition to the name of the task (allowing for side-effects).
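The failure-detection part of this scheme can be sketched as a simple timeout check. This is an illustration only: the function name, the data structures, and the timeout value are assumptions and do not describe how any particular implementation tracks its workers.

```python
def reassign_dead_workers(last_heartbeat, assignments, now, timeout):
    """Return (workers considered dead, tasks that must be rescheduled).

    last_heartbeat -- dict: worker name -> time of its last status report
    assignments    -- dict: worker name -> list of tasks assigned to it
    """
    dead = sorted(
        worker for worker, seen in last_heartbeat.items() if now - seen > timeout
    )
    orphaned = [task for worker in dead for task in assignments.get(worker, [])]
    return dead, orphaned


last_heartbeat = {"w1": 95.0, "w2": 60.0}
assignments = {"w1": ["map-0"], "w2": ["map-1", "map-2"]}

dead, orphaned = reassign_dead_workers(
    last_heartbeat, assignments, now=100.0, timeout=30.0
)
print(dead, orphaned)
```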

The reduce operations operate much the same way. Because of their inferior properties with regard to parallel operations, the master node attempts to schedule reduce operations on the same node, or in the same rack as the node holding the data being operated on. This property is desirable as it conserves bandwidth across the backbone network of the datacenter.

Implementations are not necessarily highly reliable. For example, in older versions of Hadoop the NameNode was a single point of failure for the distributed filesystem. Later versions of Hadoop have high availability with an active/passive failover for the “NameNode.”

Uses

MapReduce is useful in a wide range of applications, including distributed pattern-based searching, distributed sorting, web link-graph reversal, term-vector per host, web access log stats, inverted index construction, document clustering, machine learning, and statistical machine translation. Moreover, the MapReduce model has been adapted to several computing environments like multi-core and many-core systems, desktop grids, volunteer computing environments, dynamic cloud environments, and mobile environments.

At Google, MapReduce was used to completely regenerate Google’s index of the World Wide Web. It replaced the old ad hoc programs that updated the index and ran the various analyses.

MapReduce’s stable inputs and outputs are usually stored in a distributed file system. The transient data is usually stored on local disk and fetched remotely by the reducers.